Keypoint object detection

For most of TestArchitect’s picture-handling actions, you have a choice of methods for comparing a tested image against a baseline. Keypoint object detection is supported by all but the check picture action. Unlike pixel-by-pixel comparison, which requires 100% equivalence between the pixels of a baseline and the image under test in order to consider them a positive match, keypoint comparisons tolerate differences between compared images. Moreover, you can adjust the tolerance factor in keypoint comparisons.

Keypoint object detection is also the technique of choice when you are searching for the presence of a pattern, or object, within an image under test. This is especially true when the object in the test image can vary from the baseline to some degree, perhaps due to changes of scale, position, color or background. Moreover, the presence of multiple copies, or variations, of the baseline object may also be detected within the image under test.

As with pixel-by-pixel detection, keypoint detection begins with a baseline, or reference image. However, the baseline image in keypoint detection is used to generate a keypoint profile – a set of points determined by means of an algorithm applied to the image:

It is the keypoint profile of an image, rather than its bitmap, that is used as the reference for detecting the presence of the baseline image in the picture under test presented by an AUT. Instead of a baseline picture, you can think of the keypoint profile as defining a baseline object.

You can think of baseline definition as being a three-step process when it comes to the keypoint detection method:

- The first step is the same as for pixel-by-pixel comparison: you capture an image, generally with the Picture Capturing Tool, and store it in a new or existing picture check as a baseline picture.

- Next, you have TestArchitect apply the SIFT (Scale-Invariant Feature Transform) algorithm to generate keypoints for the image, a step that is usually near-instantaneous.

- The third step is the “training” process, in which you essentially mold the keypoint profile to define a baseline object that best fits your needs. This involves a number of sub-steps, and is often a highly iterative process, in which you remove and/or add keypoints to the active keypoint profile. In addition, during this process you set a similarity threshold (Min Accuracy (%)). This threshold specifies how close any given region of an image under test needs to be to the baseline object to be considered a match.

Step 1: Capturing a baseline picture

Use the Picture Capturing Tool or the check picture action to capture a baseline picture. You may also simply work with an existing baseline picture in a shared or regular picture check.

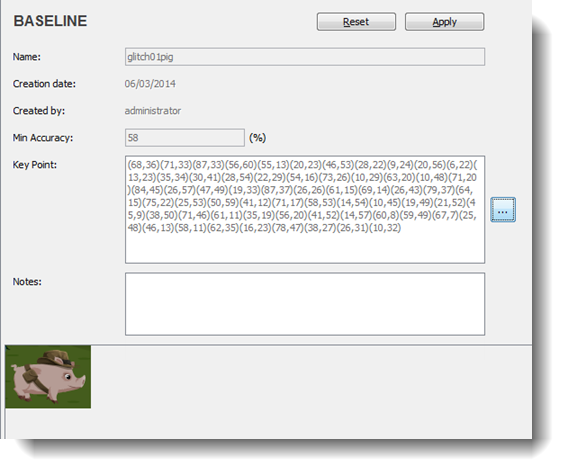

For example, let’s say that we have the following baseline picture, taken from a game, already stored in a picture check:

and that we are interested in testing for the presence of similar images of the fedora-wearing pig within other bitmaps at certain points in the automated test. We can certainly use this existing baseline picture, but because it’s best practice to limit the baseline picture to the object of interest, we capture a new baseline, limiting the area to just that surrounding our object of interest:

Step 2: Generating the keypoints

The next step is to open the baseline picture of the pig in TestArchitect’s Key Points Modification Tool. (This is done by pressing the Browse button next to the Key Point box for the baseline picture.) This causes TestArchitect to immediately generate keypoints for the entire image:

The keypoints are initially purple, indicating that they are inactive, or unused. The upcoming configuration session is used to determine which of those keypoints should be made active.

Step 3: Configuring the baseline

As mentioned, this is often a highly iterative process, the intention of which is to configure the baseline object, and the associated similarity threshold, so as to best ensure that keypoint-based picture handling actions that use this baseline will find what you want them to find, and not have any “false positives”.

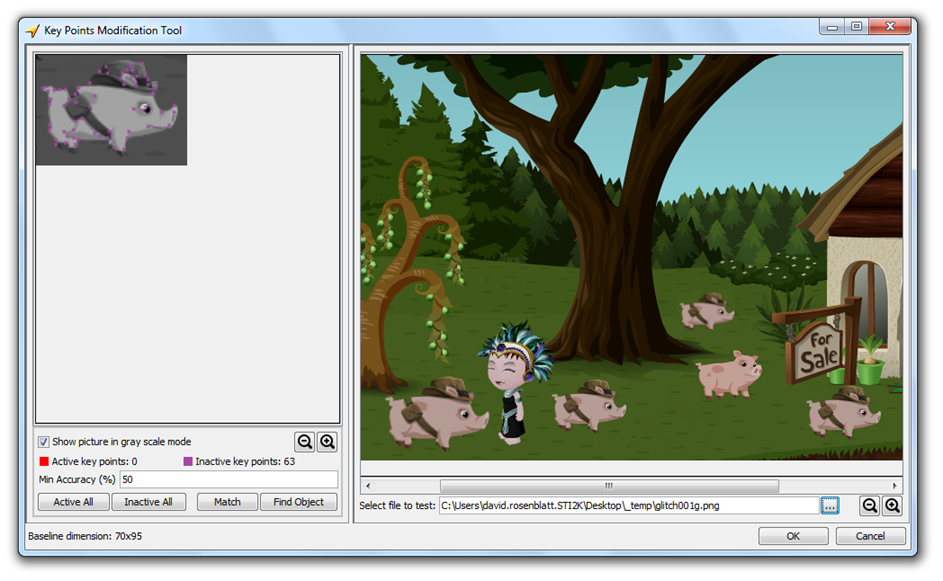

You begin by loading a training image into the Key Points Modification Tool. This is generally a picture previously captured from the AUT and saved as a .png file. Naturally, it should be either identical or similar to pictures that the AUT is expected to produce when tested with this baseline.

Note that the fedora-wearing pig appears in the above image four times, at differing scales. During tests, we want our picture-handling functions to detect all of them. We do not want anything else to be mistaken for a fedora-wearing pig, including the hatless pig.

You may find it helpful to use the zoom buttons and the Show picture in gray scale mode check box. Zooming makes it much easier to select or deselect keypoints, while displaying the image in gray scale makes it easier to see the points.

Active keypoints, as mentioned, are ultimately used to define the keypoint profile of your baseline object, while inactive keypoints have no effect. At this point, the iterative process involved in defining a baseline object has three variables that you can work with:

- Selecting and deselecting active keypoints: You can turn keypoints on and off individually or in groups, while testing the effectiveness of your current active keypoint set in making accurate matches;

- Varying the Min Accuracy value, again to optimize accuracy;

- Varying the training image (that is, opening other .png files), to test your settings against various other images that will be encountered during actual testing.

Adding & removing active keypoints: Keypoints may be toggled between their inactive and active states by clicking on them individually, effectively adding or removing them from the current keypoint profile. You can also drag your mouse over the image to select a rectangular region, then click either Active All or Inactive All to affect all keypoints in the selected area. Without a region selected, the Active All or Inactive All buttons pertain to the entire image.
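The toggling behavior described above can be pictured with a small data-structure sketch. This is only an illustration, not TestArchitect's implementation: the `Keypoint` class and the `toggle`, `set_active`, and `active_profile` helpers are hypothetical names, and the coordinates are made up.

```python
from dataclasses import dataclass


@dataclass
class Keypoint:
    x: int
    y: int
    active: bool = False  # generated keypoints start out inactive


def toggle(kp):
    """Mimic a single click: flip a keypoint between inactive and active."""
    kp.active = not kp.active


def set_active(keypoints, active, region=None):
    """Mimic Active All / Inactive All (hypothetical helper).

    region is (left, top, right, bottom); None means the entire image.
    """
    for kp in keypoints:
        inside = region is None or (
            region[0] <= kp.x <= region[2] and region[1] <= kp.y <= region[3]
        )
        if inside:
            kp.active = active


def active_profile(keypoints):
    """The keypoint profile consists of the active keypoints only."""
    return [kp for kp in keypoints if kp.active]


# Made-up keypoint coordinates, for illustration only.
keypoints = [Keypoint(10, 10), Keypoint(40, 25), Keypoint(90, 80)]
toggle(keypoints[0])                                      # click a single point
set_active(keypoints, True, region=(30, 20, 100, 100))    # Active All in a rectangle
set_active(keypoints, False, region=(80, 70, 100, 100))   # Inactive All in a smaller one
print([(kp.x, kp.y) for kp in active_profile(keypoints)])
```

Calling `set_active` without a region affects every keypoint, which corresponds to using the buttons with nothing selected.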

Note that, when in Match mode (discussed below), you can activate or deactivate baseline keypoints via the training image, again either individually or within selection rectangles. (That is, you can turn keypoints on or off in the training image to have their corresponding keypoints in the baseline activated or deactivated.) The training image is unaffected. Instead, the best-match correspondence lines connecting the two images determine which baseline keypoints are affected.

A keypoint in the baseline image can have one of three colors:

- purple: the keypoint is currently inactive (that is, not included in the keypoint profile).

- red: the keypoint is currently active (included in the keypoint profile).

- green: the keypoint is currently selected.

Matching: Matching is the process of determining best keypoint matches between the current baseline object and the training image, and displaying those matches. It is intended as an aid in letting you determine which points to include in your keypoint profile (that is, active keypoints).

With one or more baseline keypoints active, click the Match button. TestArchitect responds by calculating all the keypoints in the training image. Then, for each active keypoint in the baseline image, it determines the closest match in the training image, based on the contents of each keypoint descriptor, and renders a gray best-match correspondence line between each associated pair of keypoints.
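As a rough illustration of the best-match step, the sketch below pairs each active baseline keypoint with the training keypoint whose descriptor is nearest. It assumes Euclidean distance between descriptors, which is a common choice for SIFT but is not confirmed here as TestArchitect's metric. The `best_matches` function and the tiny three-value descriptors are hypothetical; real SIFT descriptors are much longer.

```python
import math


def best_matches(baseline, training):
    """Map each active baseline keypoint index to its nearest training index.

    baseline: list of (active, descriptor) pairs
    training: list of descriptors
    Assumes Euclidean distance as the similarity measure.
    """
    matches = {}
    for i, (active, descriptor) in enumerate(baseline):
        if not active:
            continue  # inactive keypoints take no part in matching
        distances = [math.dist(descriptor, candidate) for candidate in training]
        matches[i] = min(range(len(training)), key=distances.__getitem__)
    return matches


# Made-up descriptors, for illustration only.
baseline = [
    (True, (0.0, 1.0, 0.0)),
    (False, (1.0, 0.0, 0.0)),
    (True, (0.5, 0.5, 0.0)),
]
training = [(1.0, 0.1, 0.0), (0.1, 0.9, 0.0), (0.4, 0.6, 0.1)]

# Each resulting pair corresponds to one correspondence line in Match mode.
print(best_matches(baseline, training))
```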

With the lines displayed, you can decide which baseline keypoints to keep active, and which to deactivate. The figure below, for example, suggests two active keypoints that might be good candidates for removal.

You should note, however, that Match mode by itself has limited utility. Three factors should be considered:

- First, for any given baseline keypoint, Match mode can display only the single best match. But in fact, when the keypoint detection system is employed to find objects, it does not restrict itself to single best matches. For each baseline keypoint, a list of best matching keypoints in the image under test is generated, with each such point given a ranking (best match, 2nd best match, etc.). The algorithm for object detection looks at all, or many, of the points in each list.

- Similarly, whereas Match mode only allows for a one-to-one correspondence between baseline and training image keypoints, keypoint-based object detection looks for the presence of more than one copy (or variant) of the baseline object in the image under test. Hence, object detection allows for a one-to-many relationship between the keypoints of the baseline and tested images.

- Match mode determines best matches in a context-free manner, whereas object detection considers best matches in light of other matches in the vicinity. For example, consider the lower-right mismatched keypoint in the figure above. The object detection process is very unlikely to erroneously detect an object associated with that keypoint, because it is very doubtful that it will find a set of close-matching keypoints for other parts of the fedora pig in the vicinity of that point.
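The first two points above contrast a single best match with a ranked list of candidates. A minimal sketch of such a ranking follows, again assuming Euclidean distance between descriptors. The `ranked_matches` function, the parameter `k`, and the descriptor values are hypothetical and only illustrate the idea of keeping more than one candidate per baseline keypoint.

```python
import math


def ranked_matches(descriptor, candidates, k=3):
    """Return the indices of the k closest candidates, best match first."""
    order = sorted(
        range(len(candidates)),
        key=lambda j: math.dist(descriptor, candidates[j]),
    )
    return order[:k]


# One baseline descriptor and four made-up descriptors from an image under test.
baseline_descriptor = (0.0, 1.0)
candidates = [(0.9, 0.1), (0.1, 0.9), (0.0, 0.8), (0.3, 1.0)]

# Match mode would show only the first index; object detection can
# consider several, which allows one-to-many correspondences.
print(ranked_matches(baseline_descriptor, candidates))
```

Keeping several ranked candidates is what lets one baseline keypoint correspond to the same feature in multiple copies of the object.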

When in Match mode, the Match button changes to Clear. Click Clear to remove the correspondence lines and exit Match mode.

Object detection: With your tentative settings for active keypoints established, click Find Object. This activates keypoint-based object detection, using your current keypoint profile, along with the similarity threshold set in the Min Accuracy (%) box, to find copies, or variants, of the baseline object. The process generally takes a few seconds, during which a progress bar appears at the bottom of the dialog box:

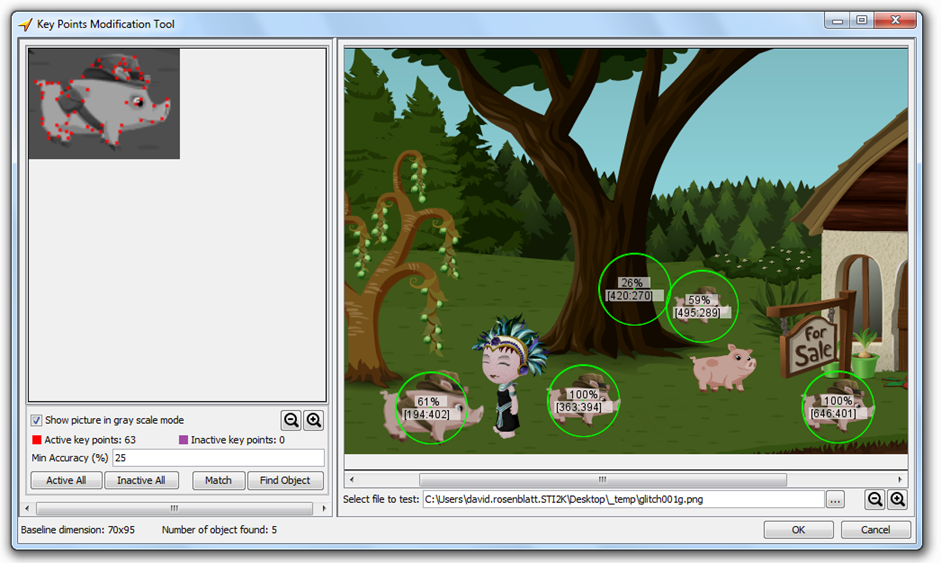

Upon completion of object detection, the training image is overlaid with circles indicating where TestArchitect found matching object(s). Inside each circle is a label indicating its degree of similarity with the baseline object, and another label specifying its x,y location.

Note that, as seen above, we have detected all four of our fedora pigs, despite their size variations, and have avoided a false positive on the hatless pig. We do, however, have one false positive: the tree. But note that it has a similarity index of only 26%, whereas the valid hits all have similarity indexes of 59% and up. Hence, to avoid falsely detecting the tree, all we need to do is raise the value of Min Accuracy (%) to a level above 26% (and below the 59% of the weakest valid hit).
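The effect of the threshold can be sketched as a simple filter over detection results. Only the 26% and 59% figures come from the example above. The other similarity values, the labels, and the `filter_detections` function are hypothetical, and the sketch assumes a detection is kept when its similarity is greater than or equal to the threshold.

```python
def filter_detections(detections, min_accuracy):
    """Keep detections whose similarity meets the threshold (assumed >=)."""
    return [d for d in detections if d["similarity"] >= min_accuracy]


detections = [
    {"label": "fedora pig 1", "similarity": 59},  # lowest valid hit (from the example)
    {"label": "fedora pig 2", "similarity": 64},  # hypothetical value
    {"label": "fedora pig 3", "similarity": 72},  # hypothetical value
    {"label": "fedora pig 4", "similarity": 81},  # hypothetical value
    {"label": "tree", "similarity": 26},          # false positive (from the example)
]

# With a low threshold the tree is reported; raising it above 26 removes it.
print([d["label"] for d in filter_detections(detections, 20)])
print([d["label"] for d in filter_detections(detections, 30)])
```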

Completing baseline configuration

Once you have tweaked everything to your satisfaction (established an active keypoint set, set a similarity threshold in Min Accuracy (%), and tested your settings with one or more training images), click OK in the Key Points Modification Tool. You are taken back to the associated baseline tab, where you can see that your settings for Min Accuracy and the active keypoint set (Key Point box) have been recorded: